Apple’s new BCI protocol enables users to control iPhones and iPads using only their thoughts. This breakthrough brain-computer interface integrates with iOS and iPadOS, powered by Synchron’s Stentrode implant. It marks a major leap forward in accessibility and hands-free technology.

KumDi.com

Apple has unveiled its cutting-edge brain-controlled iPhone and iPad experience through a powerful new Brain-Computer Interface (BCI) protocol. This pioneering technology, integrated directly into iOS and iPadOS, allows users to operate their devices using only their thoughts. Enabled by Synchron’s Stentrode implant, Apple’s BCI innovation reshapes accessibility and human-device interaction for the future.

In a major technological breakthrough, Apple has unveiled its new Brain-Computer Interface (BCI) Human Interface Device (HID) protocol, enabling users to control iPhones and iPads directly with their thoughts. This revolutionary development is being pioneered through a partnership with Synchron, a neurotechnology company known for its minimally invasive brain implants. The integration of BCI support into iOS, iPadOS, macOS, and visionOS devices marks a significant step toward fully native thought-controlled computing, opening new possibilities for accessibility, healthcare, and human-machine interaction.

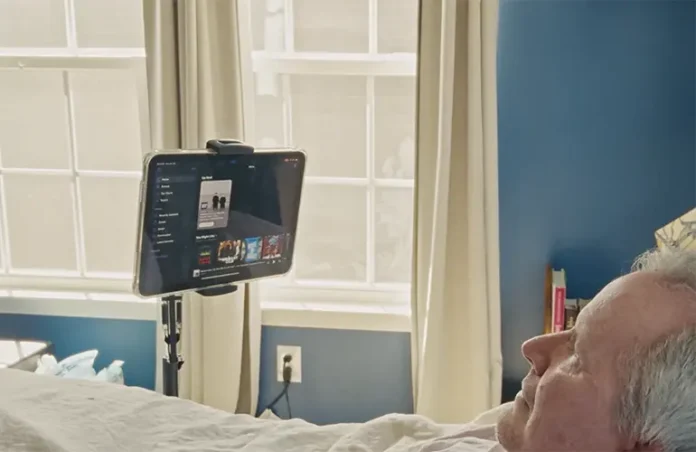

Synchron Demonstrates First Native Thought-Controlled iPad Use

The world got its first public look at this innovation when a patient living with ALS, identified as Mark, successfully navigated an iPad using only his mind. Synchron’s Stentrode implant is delivered through the blood vessels and sits in a vessel next to the motor cortex. With it, Mark was able to open apps, move the cursor, and write messages on an iPad without any physical movement. Unlike prior third-party BCI systems that relied on external apps or clunky setups, Apple’s native HID support translates brain signals that encode motor intent into user interface interactions in real time.

How Apple’s BCI HID Protocol Works

Apple’s BCI HID protocol allows brain-signal decoding devices to communicate natively with Apple products as if they were physical peripherals like mice or keyboards. The Synchron system reads signals related to movement intention and transmits them wirelessly to a decoder. The decoder then connects to the iPad or iPhone via Bluetooth, enabling fluid, low-latency control. This integration doesn’t require any custom apps or software layers; instead, Apple’s operating systems treat the BCI input like input from any standard human interface device, ensuring a stable and intuitive user experience across devices.
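To make the "BCI as a peripheral" idea concrete, here is a minimal Python sketch of the pipeline described above. It is an illustration only: the details of Apple’s BCI HID report format are not covered in this article, so the sketch borrows the generic 3-byte mouse-style layout (buttons, dx, dy) as a stand-in. The names `DecodedIntent`, `intent_to_report`, `apply_report`, `gain`, and `click_threshold` are all hypothetical and do not come from Apple or Synchron.

```python
from __future__ import annotations

import struct
from dataclasses import dataclass


@dataclass
class DecodedIntent:
    """Hypothetical decoder output (an assumption, not Synchron's format).

    vx, vy: intended pointer velocity, each in [-1.0, 1.0]
    click_confidence: how sure the decoder is that a "click" was intended, in [0.0, 1.0]
    """
    vx: float
    vy: float
    click_confidence: float


def _to_int8(value: float) -> int:
    """Round and clamp a value into the signed 8-bit range used by the report."""
    return max(-127, min(127, round(value)))


def intent_to_report(intent: DecodedIntent, gain: int = 20,
                     click_threshold: float = 0.8) -> bytes:
    """Decoder side: pack an intent into a 3-byte mouse-style report.

    Layout (assumed stand-in): byte 0 = button bits, byte 1 = dx, byte 2 = dy.
    """
    dx = _to_int8(intent.vx * gain)
    dy = _to_int8(intent.vy * gain)
    buttons = 0x01 if intent.click_confidence >= click_threshold else 0x00
    return struct.pack("<Bbb", buttons, dx, dy)


def apply_report(cursor: tuple[int, int], report: bytes) -> tuple[tuple[int, int], bool]:
    """Host side: handle the report the same way as any other pointing device."""
    buttons, dx, dy = struct.unpack("<Bbb", report)
    new_cursor = (cursor[0] + dx, cursor[1] + dy)
    return new_cursor, bool(buttons & 0x01)


if __name__ == "__main__":
    # One decoded sample: move right and slightly up, with a confident click.
    report = intent_to_report(DecodedIntent(vx=0.5, vy=-0.25, click_confidence=0.9))
    print(report.hex())                       # 010afb
    print(apply_report((100, 100), report))   # ((110, 95), True)
```

The point of the sketch is the division of labor: the decoder turns intent into a small, device-like report, and the host needs no BCI-specific app logic to act on it. A real system would also need signal filtering, calibration, and a transport layer such as Bluetooth, none of which is modeled here.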

Accessibility and Clinical Significance

This innovation represents a critical advancement for individuals with paralysis or neurodegenerative diseases. For those who have lost control over their bodies but retain cognitive ability, the ability to communicate and interact digitally through thought alone restores autonomy and dignity. Clinical trials have shown that even with relatively slow input speeds, users experience significant improvement in digital participation. Synchron’s system, approved for investigational use by the FDA, has already been implanted in multiple patients in the United States and Australia, marking the beginning of a new era in assistive technology.

Integration Across the Apple Ecosystem in 2025

Apple plans to release broader developer tools and platform updates in late 2025 that will expand BCI HID support across iOS 26, iPadOS 26, macOS, and visionOS. Developers will gain access to new input pathways, enabling the creation of apps and experiences tailored for users with brain-based control systems. Apple’s strategy focuses on making BCI an embedded, accessible, and universal input method—much like touch, voice, or eye tracking—rather than a niche technology.

Neurotechnology and the Future of Health Innovation

The rise of brain-controlled interfaces aligns with broader health technology trends. Artificial intelligence is being rapidly adopted in diagnostic medicine, with recent developments such as Mayo Clinic’s AI-powered dementia detection tool that distinguishes between multiple types of dementia through advanced scan analysis. At the same time, global health leaders, including figures like Bill Gates, are increasing investments in women’s health and vaccine access, recognizing the intersection of innovation, equity, and longevity. These efforts emphasize that future healthcare will be deeply interconnected with AI, biotech, and digital systems, much like Apple’s BCI-enabled devices.

Human-Machine Interfaces Become Personal

The ability to control a smartphone or tablet with a thought is no longer science fiction. Apple and Synchron have demonstrated that deeply integrated BCI systems can become a practical reality for everyday users. This is not only a triumph of engineering but also a signal that human-computer interfaces are entering a new paradigm—one where the boundary between mind and machine is nearly invisible. As the technology matures and becomes more widely accessible, it will change how we think about interaction, agency, and what it means to be digitally present.

FAQs

How does Apple’s brain-controlled iPhone and iPad technology work?

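Synchron’s Stentrode implant picks up brain signals related to movement intention, and a decoder translates them. Apple’s BCI HID protocol then lets iOS and iPadOS treat the decoded signal as native input, much like input from a mouse or keyboard, allowing thought-based control of Apple devices.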

What is Apple’s new BCI protocol?

Apple’s BCI (Brain-Computer Interface) protocol is a native input system that enables hands-free, mind-controlled operation of iPhones, iPads, and other Apple devices.

Who benefits from Apple’s mind-controlled iPad?

Individuals with mobility challenges, such as ALS patients, benefit the most from Apple’s mind-controlled iPad, gaining independence through BCI-powered accessibility tools.

Is the brain-controlled iPad available now?

The brain-controlled iPad experience is currently in clinical use with Synchron’s trial participants. Wider rollout is expected with iOS and iPadOS updates later in 2025.

What makes Apple’s BCI different from other thought-controlled devices?

Apple’s BCI protocol offers native, low-latency integration into its ecosystem, unlike earlier systems that relied on external apps. The operating system handles BCI input directly, so thoughts translate into actions on iPhones and iPads in real time.