AI itself is not inherently invading our privacy, but the way AI systems collect, analyze, and infer personal data can create serious privacy risks. Without strong regulation and safeguards, AI can enable large-scale surveillance, profiling, and data misuse across digital platforms.

KumDi.com

Is AI invading our privacy, or is the concern being overstated? As artificial intelligence increasingly relies on massive datasets, the real issue lies not in AI technology itself but in how personal data is collected, inferred, and governed. Understanding AI privacy risks is essential for individuals, businesses, and policymakers in 2026.

To understand the issue clearly, we must define privacy in technical—not emotional—terms.

In modern data governance, privacy includes:

- Data collection limits (what data is gathered)

- Purpose limitation (why it is used)

- Data minimization (how much is needed)

- User consent and control

- Protection against re-identification

- Protection from misuse, inference, or profiling

AI systems challenge privacy not because they “think,” but because they infer.

Key distinction:

Traditional software stores data.

AI extracts patterns, predictions, and latent traits from data.

How AI Systems Interact With Personal Data

1. Data Collection at Scale

AI systems are trained using:

- Search queries

- Voice recordings

- Images and facial data

- Location history

- Health and biometric data

- Browsing and purchasing behavior

- Workplace and productivity data

In real-world deployments (healthcare, finance, HR, marketing), these datasets often include indirect identifiers—data points that seem anonymous but can be recombined.

Example (Healthcare AI):

Clinical decision-support tools trained on “de-identified” patient data have repeatedly been shown to allow re-identification when combined with external datasets (dates, ZIP codes, rare conditions).
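The re-identification risk described above can be checked before a dataset is released. The following is a minimal sketch, assuming a hypothetical record layout (`zip`, `birth_year`, `sex`, `diagnosis`) and synthetic rows. It counts how many records share each combination of indirect identifiers; a group of size one is a record that an outside dataset could single out.

```python
from collections import Counter

# Synthetic "de-identified" records: names removed, indirect identifiers kept.
records = [
    {"zip": "02139", "birth_year": 1984, "sex": "F", "diagnosis": "asthma"},
    {"zip": "02139", "birth_year": 1984, "sex": "F", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_year": 1951, "sex": "M", "diagnosis": "rare condition X"},
    {"zip": "02140", "birth_year": 1990, "sex": "M", "diagnosis": "asthma"},
    {"zip": "02140", "birth_year": 1990, "sex": "M", "diagnosis": "migraine"},
]

# Hypothetical choice of fields an attacker could find in an external dataset.
QUASI_IDENTIFIERS = ("zip", "birth_year", "sex")

def group_sizes(rows, keys=QUASI_IDENTIFIERS):
    """Count how many rows share each combination of quasi-identifiers."""
    return Counter(tuple(row[k] for k in keys) for row in rows)

def k_anonymity(rows, keys=QUASI_IDENTIFIERS):
    """Smallest group size; k == 1 means at least one row is unique."""
    return min(group_sizes(rows, keys).values())

def unique_rows(rows, keys=QUASI_IDENTIFIERS):
    """Rows whose quasi-identifier combination appears exactly once."""
    sizes = group_sizes(rows, keys)
    return [row for row in rows if sizes[tuple(row[k] for k in keys)] == 1]

k = k_anonymity(records)
exposed = unique_rows(records)
print(f"k = {k}; {len(exposed)} record(s) can be singled out")
```

In this toy dataset one patient is unique on ZIP code, birth year, and sex, so anyone holding a voter roll or similar list with those three fields could attach a name to the diagnosis. A k-anonymity check is only one of several possible tests and does not by itself guarantee safety.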

2. Inference: The Hidden Privacy Risk

One of the most misunderstood aspects of AI privacy is inference.

AI can infer:

- Mental health status

- Pregnancy

- Political orientation

- Sexual orientation

- Income level

- Disease risk

- Cognitive decline

Even when users never explicitly share this information.

📌 This is called “derived data”, and under many legal frameworks, it remains weakly regulated.

Privacy harm increasingly comes not from what you share—but from what AI predicts about you.
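To make the idea of inference concrete, here is a deliberately crude sketch, not a real model. It assumes synthetic purchase histories and a hypothetical boolean trait that some users disclosed. The code learns, per item, how often buyers had the trait, then scores a user who never disclosed anything.

```python
from collections import defaultdict

# Synthetic training data: (purchased items, trait the user disclosed).
labeled = [
    ({"unscented lotion", "prenatal vitamins"}, True),
    ({"unscented lotion", "cotton balls"}, True),
    ({"coffee", "cotton balls"}, False),
    ({"coffee", "energy drink"}, False),
]

def item_rates(examples):
    """For each item, the fraction of its buyers who disclosed the trait."""
    seen = defaultdict(int)
    positive = defaultdict(int)
    for items, has_trait in examples:
        for item in items:
            seen[item] += 1
            positive[item] += int(has_trait)
    return {item: positive[item] / seen[item] for item in seen}

def infer_score(items, rates, default=0.5):
    """Average the per-item rates; unseen items contribute the default."""
    if not items:
        return default
    return sum(rates.get(item, default) for item in items) / len(items)

rates = item_rates(labeled)

# This user shared no trait, only a shopping basket.
undisclosed_user = {"unscented lotion", "prenatal vitamins", "coffee"}
score = infer_score(undisclosed_user, rates)
print(f"inferred score: {score:.2f}")
```

The resulting score is derived data: it exists only because the system computed it, yet it can be stored, sold, or acted on like any other attribute. Production systems use far richer features and models, which makes such inferences more accurate and harder for users to anticipate.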

3. Continuous Surveillance via “Smart” Systems

AI is embedded in:

- Smartphones

- Smart homes

- Cars

- Wearables

- Public cameras

- Workplace monitoring tools

These systems often operate passively, collecting behavioral data continuously.

Workplace example:

AI productivity tools used in remote work environments can monitor keystrokes, facial expressions, voice tone, and attention patterns—creating behavioral surveillance far beyond traditional performance metrics.

Is AI the Problem—or How We Use It?

AI is best understood as a force multiplier: it amplifies the data practices and incentives that are already in place.

The real drivers of privacy erosion are:

- Business models based on behavioral advertising

- Weak enforcement of data protection laws

- Lack of algorithmic transparency

- Poor consent design (dark patterns)

- Data brokers and secondary data markets

AI accelerates these issues by:

- Making surveillance cheaper

- Making profiling more accurate

- Making decisions less explainable

Real-World Cases That Raised Privacy Alarms

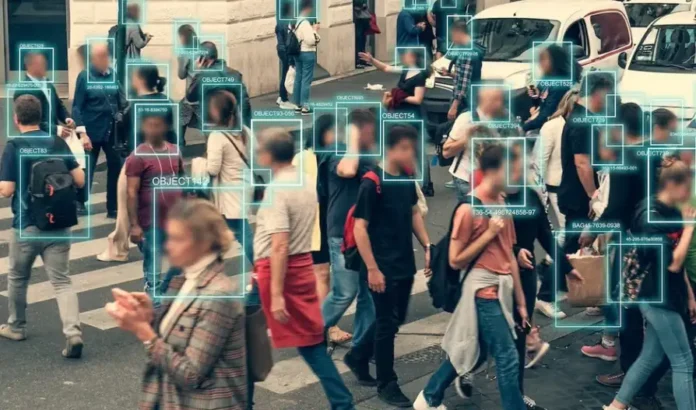

Case 1: Facial Recognition in Public Spaces

By 2024–2025, multiple cities worldwide suspended or restricted facial recognition after audits revealed:

- Racial bias

- Misidentification

- Lack of public consent

- Function creep (law enforcement → commercial use)

As of 2026, many jurisdictions require explicit necessity tests and human oversight before deployment.

Case 2: AI Health Apps and Sensitive Inference

Mental health and fertility apps using AI analytics were found to:

- Share inferred health data with advertisers

- Label users into risk categories without transparency

- Retain data beyond stated purposes

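This led to stricter enforcement under GDPR in Europe and, in the United States, to FTC actions against consumer health apps, many of which fall outside HIPAA. It also fueled calls for AI-specific health regulation.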

Case 3: Generative AI Training Data Concerns

Large language models raised concerns about:

- Training on copyrighted or personal data

- Memorization of sensitive information

- Outputting private details under rare prompts

By 2026, model training audits, data provenance tracking, and red-team testing have become standard for responsible providers.
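One small piece of the red-team testing mentioned above can be sketched as an output scan. The example below assumes a hypothetical `generate` callable standing in for a model API, a stub named `fake_generate` with canned responses, and three illustrative regular expressions. Real audits use broader detectors and far larger prompt sets.

```python
import re

# Illustrative patterns only; real PII detection needs much wider coverage.
PII_PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "us_phone": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def find_pii(text):
    """Return {pattern_name: [matches]} for every pattern that matched."""
    hits = {}
    for name, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            hits[name] = matches
    return hits

def audit_outputs(generate, prompts):
    """Send each test prompt to `generate` and collect flagged outputs."""
    flagged = []
    for prompt in prompts:
        hits = find_pii(generate(prompt))
        if hits:
            flagged.append({"prompt": prompt, "hits": hits})
    return flagged

def fake_generate(prompt):
    """Hypothetical stand-in for a model call; returns canned strings."""
    canned = {
        "contact for jane": "You can reach her at jane.doe@example.com or 555-123-4567.",
        "weather": "It is sunny today.",
    }
    return canned.get(prompt, "")

report = audit_outputs(fake_generate, ["contact for jane", "weather"])
for entry in report:
    print(entry["prompt"], "->", entry["hits"])
```

A scan like this catches only well-formatted identifiers. It says nothing about memorized names, addresses, or free-text medical details, which is why it complements rather than replaces training-data audits.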

What Global Regulations Say (As of 2026)

European Union: AI Act + GDPR

The EU AI Act classifies systems by risk:

- Unacceptable risk: banned (social scoring, certain biometric surveillance)

- High risk: strict compliance (health, hiring, credit)

- Limited risk: transparency requirements

- Minimal risk: voluntary codes

Privacy protection is embedded through:

- Mandatory impact assessments

- Human oversight

- Data governance obligations

United States: Sector-Based but Strengthening

The U.S. still lacks a single federal privacy law, but:

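- State laws such as California's CPRA, Virginia's VCDPA, and the Colorado Privacy Act (CPA) address automated profiling, in some cases through opt-out rights

- The FTC actively enforces against deceptive AI practices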

- Health and biometric data face tighter scrutiny

Asia-Pacific

- Japan and South Korea emphasize human-centric AI

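- Singapore enforces consent requirements under its Personal Data Protection Act and promotes explainability through its voluntary Model AI Governance Framework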

- China tightly controls AI deployment and has a personal data law (PIPL), but its rules prioritize state interests over individual privacy

How Responsible AI Protects Privacy in Practice

Based on real deployments in healthcare, finance, and enterprise systems, privacy-preserving AI is achievable when designed correctly.

Key Safeguards Used in 2026

1. Privacy-by-Design Architecture

- Data minimization at ingestion

- Purpose-limited pipelines

- Strict access control
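Data minimization and purpose limitation can be enforced in code at the point of ingestion. The sketch below assumes a hypothetical purpose registry (`ALLOWED_FIELDS`) and made-up field names. Each declared purpose may see only its listed fields, and any undeclared purpose is rejected outright.

```python
# Hypothetical purpose registry: each purpose lists the only fields it may use.
ALLOWED_FIELDS = {
    "appointment_reminders": {"user_id", "appointment_time", "phone"},
    "usage_analytics": {"user_id", "feature", "timestamp"},
}

def minimize(record, purpose):
    """Keep only the fields the declared purpose is allowed to see."""
    try:
        allowed = ALLOWED_FIELDS[purpose]
    except KeyError:
        raise ValueError(f"undeclared purpose: {purpose!r}") from None
    return {key: value for key, value in record.items() if key in allowed}

# Synthetic incoming record containing more than any single purpose needs.
raw = {
    "user_id": "u1",
    "appointment_time": "2026-03-01T09:00",
    "phone": "555-0100",
    "diagnosis": "asthma",
    "location": "42.36,-71.09",
}

minimized = minimize(raw, "appointment_reminders")
print(minimized)
```

Filtering with an allow-list rather than a block-list means a newly added sensitive field is excluded by default until someone justifies its use for a specific purpose.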

2. Differential Privacy

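- Calibrated statistical noise added to query results or model updates

- Bounds how much any one person's data can change the output, which limits re-identification risk

- Used in the 2020 U.S. Census and in some public health statistics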
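A common building block of differential privacy is the Laplace mechanism: add noise with scale equal to the query's sensitivity divided by the privacy parameter epsilon. The following is a minimal sketch for a counting query on synthetic ages; the function names are illustrative. Plain floating-point sampling like this is not hardened for production, where a vetted library would normally be used.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def dp_count(values, predicate, epsilon, rng):
    """Noisy count. A count changes by at most 1 when one person is added
    or removed (sensitivity 1), so the noise scale is 1 / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

rng = random.Random(42)  # seeded only to make the demo repeatable
ages = [34, 67, 45, 71, 29, 80, 52, 66]  # synthetic data

noisy = dp_count(ages, lambda age: age >= 65, epsilon=1.0, rng=rng)
print(f"noisy count of people aged 65+: {noisy:.2f}")
```

A smaller epsilon means more noise and stronger privacy; a larger epsilon means more accurate answers and weaker privacy. Each additional query spends more of the privacy budget, so real systems also track cumulative epsilon.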

3. Federated Learning

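- Models trained locally on devices

- Raw data stays on the device; only model updates are shared, and these can still leak information unless protected

- Common in medical imaging research and mobile keyboard prediction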
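The data flow in federated learning can be sketched with federated averaging on a toy linear model. The example assumes two simulated clients with synthetic data drawn from y = 2x + 1; the function names and hyperparameters are illustrative. Only the model weights cross the client boundary, never the data points.

```python
def local_update(weights, data, lr=0.3, epochs=5):
    """Run a few gradient-descent steps on one client's private data.
    Model: y = w * x + b, trained with mean squared error."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

def federated_average(client_weights, client_sizes):
    """Average client models, weighting each by its local dataset size."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)

# Synthetic client datasets from y = 2x + 1; each list stays on its "device".
clients = [
    [(0.0, 1.0), (0.5, 2.0)],
    [(0.25, 1.5), (0.75, 2.5), (1.0, 3.0)],
]

global_weights = (0.0, 0.0)
for _ in range(200):
    # Each client trains locally and returns only its updated weights.
    updates = [local_update(global_weights, data) for data in clients]
    global_weights = federated_average(updates, [len(d) for d in clients])

w, b = global_weights
print(f"w = {w:.3f}, b = {b:.3f}")
```

The server recovers the shared trend without ever seeing a raw record. Model updates can still reveal information about local data, so deployments often combine this pattern with secure aggregation or differential privacy.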

4. Model Governance & Auditing

- Training data documentation

- Bias and privacy testing

- Incident response plans

📊 Suggested visual aid:

A diagram comparing centralized vs federated learning data flows

What Individuals Can Realistically Do

While systemic solutions matter most, individuals still have leverage:

- Review AI permissions in apps and devices

- Limit unnecessary data sharing

- Use privacy dashboards and opt-outs

- Choose services with transparent AI policies

- Understand that “free” often means “data-funded”

However, privacy should not depend on user vigilance alone. When it does, that is a policy failure, not a personal one.

Is AI Privacy Risk Inevitable?

No. But unmanaged AI risk is.

History shows that:

- New technologies initially outpace regulation

- Harm occurs during the gap

- Standards eventually stabilize usage

AI is currently in that transitional phase.

The question is no longer “Is AI invading privacy?”

The real question is:

Will societies enforce limits before invasive uses become normalized?

Expert Conclusion: A Balanced Reality Check

AI is not a silent spy by default.

It becomes invasive when economic incentives, weak governance, and opaque design align.

As of 2026:

- The risks are real

- The solutions exist

- Enforcement is uneven

- Public understanding is improving

Privacy-respecting AI is not only possible—it is already being deployed in regulated sectors.

The next decade will determine whether those standards become the norm—or the exception.

FAQs

Is AI invading our privacy in everyday life?

AI does not automatically invade privacy, but risks arise when systems collect behavioral data, infer sensitive traits, or enable surveillance without transparency or consent.

How does artificial intelligence surveillance affect personal data?

AI-driven surveillance can track location, behavior, and preferences at scale, which increases the risk of profiling and misuse when safeguards are not enforced.

What are the biggest AI data privacy risks in 2026?

The main AI data privacy risks include unauthorized data sharing, re-identification of anonymized data, behavioral inference, and lack of user control over AI-driven decisions.

Are AI privacy safeguards improving globally?

Yes. Governments and organizations are strengthening AI privacy safeguards through regulations like the EU AI Act, privacy-by-design systems, and data minimization standards.

How can individuals protect themselves from AI privacy risks?

Users can reduce AI privacy risks by limiting data sharing, reviewing app permissions, choosing transparent platforms, and supporting stronger AI privacy regulation.